Business Process Re-engineering: A Turning Point in Novell's Imaging Studies


Senior Research Engineer

Novell Systems Research

BRENNA JORDAN

Research Assistant

Novell Systems Research

01 Jun 1996

The imaging industry has undergone a radical transformation. Organizations are no longer merely buying an imaging system; they are re-engineering their business processes to cut costs and increase productivity. Imaging is now being examined in the context of business process re-engineering (BPR) and the new frontier of application integration. In light of the strong value proposition stemming from the integration of technologies at the desktop, our imaging tests need to reflect more of the "real-world" possibilities for hardware and software. Accordingly, this AppNote describes the rationale behind the mindset change from performance-based testing to GUI-based integration. It also presents our new directions for future testing.

PREVIOUS APPNOTES IN THIS SERIES

- Mar 96 "New Bottlenecks in LAN-based Imaging Systems"

- Dec 95 "LAN-based Imaging Revisited"

- Nov 93 "Multi-Segment LAN Imaging: Departmental Configuration Guidelines"

- Jul 93 "Multi-Segment LAN Imaging Implementations: Four-Segment Ethernet"

- May 93 "Imaging Test Results: Retrieval Rates on Single- and Multiple-Segment LANs"

- Feb 93 "Imaging Configurations Performance Test Results"

- Jan 93 "Imaging Configurations and Process Testing"

- Introduction

- The Impetus for Change

- Business vs. Technology

- Business Process Re-engineering

- Developing New Test Bench Configurations

- Conclusion

Introduction

Previous articles on LAN-based imaging have attempted to bring our readers up to date on the latest developments in Novell Research's ongoing imaging tests. "LAN-based Imaging Revisited" in the December 1995 issue described the evolution of our test bench from the DOS platform to the Windows 3.x environment. "New Bottlenecks in LAN-based Imaging Systems" in March 1996 focused on new performance issues that were uncovered after our migration to the Windows environment. The NetNote entitled "The Effect of LAN Driver Choice on Client Performance" hinted at some of the results from our client configuration comparisons between the 16-bit NetWare DOS Requester (VLM) and the new 32-bit NetWare Client 32 software.

In the meantime, the imaging industry has undergone a radical transformation. Organizations are no longer merely buying an imaging system; they are re-engineering their business processes to cut costs and increase productivity. Imaging retrieval at the desktop used to be considered a technology unto itself. But in more and more cases, it is being considered as one part of an overall information management system. Previously disparate applications--imaging, document management, workflow, e-mail, and telephony--are being integrated at the desktop and are expected to work together with a minimum of fuss on the end-user's part. Soon, we will see "smart" server objects capable of harmonizing workloads for efficiency in a cooperative processing model.

In light of these business and technology trends, we have decided our tests need to reflect more "real-world" hardware and software configurations. This AppNote represents a significant turning point in our imaging studies. It surveys and reorders where we've been, and describes the new frontier of imaging in the context of business process re-engineering (BPR). It also presents our plan for future testing based on realistic business models.

The Impetus for Change

In the Novell Systems Research lab and in various field trips over the past several years, we have conducted analyses of both the drivers (software) and the driven (hardware) in imaging systems. We have captured, cached, indexed, retrieved, and archived application images. We have tested the effect on performance of various image file types, both at the workstation and at the server. More importantly, we have tried to deliver an up-to-date survey of LAN-based imaging system performance and configuration issues.

At this point, the majority of our testing for DOS and Windows imaging systems performance is completed, and we have released the first of our Client 32 test results. However, we now find ourselves trying to reconcile our previous studies of DOS- and Windows-based imaging systems with the ongoing rampage of technological advances. A quick review of where we've been will help put our discussion in the proper context.

The Onslaught of Technology

A large number of commercial imaging systems have already been implemented. Some of them have a first-generation Windows front-end. Some are suitable for mid-sized departmental use, and some will even allow interdepartmental image access. But imaging is not just image storage and retrieval anymore. Recent offerings include basic workflow capabilities, such as FileABC's integration of imaging with Novell's GroupWise--a fairly straightforward example of communications-based workflow. More complex imaging solutions have sophisticated workflow programming built-in. (See "An Introduction to Network Workflow" in the September 1993 Novell Application Notes for a basic discussion of workflow implementations.)

No matter how simple or complex an imaging system you implement, you will inevitably find yourself facing the endless tide of next-generation hardware and new software versions. Many application vendors have turned to object-oriented programming to better accommodate changing technology. For our own part, we have discovered the advantages of using object-oriented front-ends to ease the pain of constantly upgrading the imaging hardware in our lab. (See the NetNote "Thrash and Trash: Moving Away from Dependence on Legacy Systems with Object-Oriented Interfaces" in the February 1996 issue.)

At the same time, the industry has taken a new but not altogether unpredictable turn towards integrating heterogeneous applications. One of the most alluring promises of Windows has been the potential to combine applications that perform different functions under a common front-end interface. For example, if e-mail and telephony applications were combined in this way, users could retrieve and manage their phone messages from their e-mail in-box. These trends toward object-oriented programming and application integration have had a profound impact on current imaging implementations, as we will discuss in this AppNote.

We have learned a lot from our efforts to stay ahead of the technology curve in our testing labs. As we migrated our test bench from DOS to Windows, and then to an object-oriented approach, our client-side perceptions of performance changed significantly. Likewise, our server-side observations on imaging have revealed large differences in network behavior, beyond image retrieval (performance) issues alone. The following sections summarize the major lessons we learned from examining both sides of the client-server relationship.

Learning from the Client Side

When we first started Windows-based testing of hard-coded image retrievals in 1992, we found that many of our previous DOS-based tools were unusable in the Windows environment. The DOS parts didn't work in conjunction with the Windows parts, and DOS technologies such as batch files were problematic to say the least. Other DOS tools such as HOOKI21.EXE (a TSR for discovering the Interrupt 21 calls being made in DOS-based imaging systems) no longer worked at all. Not even reviews of the application code yielded the insights into Windows that we wanted.

We needed to know how--and more importantly when and for how long--the processes taking place at the client changed the workload characteristics of the entire client-server relationship. However, with inadequate tools it was difficult to determine exactly what a Windows client was doing and when it was doing it. We soon found ourselves questioning the usability of analogous tools in a Windows imaging environment.

The move to object-oriented tools has helped immensely and provided us with our first really viable experience with RAD (rapid application development) in the summer of 1994. We were thrilled with our newfound ability to change databases containing image indexes, or to move from a black-and-white viewer to a color viewer--all in a matter of minutes.

Eventually, we gave up on process analysis altogether and turned instead to client response time as the main indicator of the system's capacity and efficiency. More than any other tool, our object-oriented test program gave us the greatest control to measure client application response times in Windows. We started to use client response as the primary optimization indicator when adjusting parameters or configurations.

During that testing period, we were looking for ways to improve client performance of Windows-based imaging systems. After all, there was no question that the older DOS-based imaging systems, built on basic file and print services, were fundamentally faster and could be highly optimized by adjusting server and client parameters. Beyond upgrading the CPU and adding more RAM, it seemed reasonable to presume that Windows-based systems could be further optimized by techniques such as reducing client polling of the server, increasing the client-side cache, or storing executable objects in RAM.

However, we found there are some aspects of Windows processing that are unchangeable. Without the ability to change client software parameters at installation time (or, ideally, to have the client optimize itself), there was no straightforward way to communicate any real client optimization techniques to the technologist.

As we look back now, this need to explore ways of optimizing the performance of Windows systems over DOS systems should have been a clue that something was not right with our thinking. However, the full implications of this realization would not become clear until we considered our new client-response paradigm in light of server requirements.

Lessons from the Server Side

Though our investigative forays into Windows concentrated much of our attention on the client side, we eventually returned to ask questions about how changes in the server CPU and NetWare version would affect client response times. The server side of our testing has always been predominantly performance-based and configuration-oriented. Performance-based means that we wanted to know how much data could be pushed through a server before some new bottleneck appeared. (See "The Past, Present, and Future Bottlenecks of Imaging" in the October 1992 issue, and "New Bottlenecks in LAN-based Imaging Systems" in the March 1996 issue.) Configuration-oriented means we looked to techniques such as adding RAM, upgrading CPUs, and changing network interface boards and drivers on the server to obtain better performance.

Two years ago, our tests were being performed on a DOS-based imaging system in which clients were accessing eight 50KB images per second from a single NetWare server cache--that translates to 28,800 images per hour, or almost 700,000 images per day. We postulated that segmenting the LAN and reducing the number of clients per segment was the answer to high LAN channel bandwidth utilization in this high-usage environment.
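The retrieval-rate arithmetic above can be checked with a few lines of Python. This is only a sketch: the eight images per second and the 50KB image size come from the text, while the 10 Mbit/s link speed and the convention of 1 KB = 1,000 bytes are assumptions added for illustration. The utilization figure ignores protocol overhead, so it understates the real load on the wire.

```python
# Sketch of the retrieval-rate arithmetic quoted in the text.
IMAGES_PER_SECOND = 8     # from the text
IMAGE_SIZE_KB = 50        # from the text
ASSUMED_LINK_MBITS = 10   # ASSUMPTION: a 10 Mbit/s segment, not stated in the text


def images_per_hour(rate_per_second):
    """Images retrieved in one hour at a steady per-second rate."""
    return rate_per_second * 60 * 60


def images_per_day(rate_per_second):
    """Images retrieved in 24 hours at a steady per-second rate."""
    return images_per_hour(rate_per_second) * 24


def payload_kb_per_second(rate_per_second, image_size_kb):
    """Raw image payload moved per second, in KB."""
    return rate_per_second * image_size_kb


def raw_link_utilization(payload_kb_s, link_mbits):
    """Fraction of the link consumed by image payload alone.

    ASSUMPTION: 1 KB = 1,000 bytes; protocol overhead is ignored.
    """
    payload_bits = payload_kb_s * 1000 * 8
    return payload_bits / (link_mbits * 1_000_000)


payload = payload_kb_per_second(IMAGES_PER_SECOND, IMAGE_SIZE_KB)
print("images per hour:", images_per_hour(IMAGES_PER_SECOND))
print("images per day: ", images_per_day(IMAGES_PER_SECOND))
print("payload KB/s:   ", payload)
print("raw utilization:", raw_link_utilization(payload, ASSUMED_LINK_MBITS))
```

Under these assumptions the image payload alone would occupy roughly a third of a single shared segment, which is consistent with the decision to look at LAN segmentation for this workload.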

The importance of configuration tuning was evident as well. Once you arrive at a suitable configuration, the next step should always be to see if there are any NetWare server or client parameters that can further optimize performance. We were thus prepared to go forward with configuration optimization to provide customers with the high efficiency solutions they need.

However, it became apparent from some ancillary research that very few imaging implementations today are capable of placing this much demand on a NetWare server. Less than one percent of the current installed base of NetWare servers actually perform image retrievals at this rate.

Our initial data showed servers being taxed by our object-oriented Windows application even at low image retrieval rates. For example, Figure 1 shows some early test results measuring server CPU utilization for eight DOS clients compared with six Windows clients.

Figure 1: Comparison of server CPU utilization with DOS vs. Windows clients.

We observed about a 15 percent overall increase in server CPU utilization between the DOS-based tests and the Windows-based tests. That may not seem significant until you realize that this increase was produced with two fewer clients. Even if we wanted to, we couldn't scale up the number of clients per server due to the performance deficiency in the Windows client platform.

As we pursued our testing, we found that, unfortunately, there is no way to accurately predict the performance of a Windows 3.x, Windows 95, or Windows NT client application at high rates of image retrievals. In retrospect, we can see that our reliance on client response time as the bellwether of imaging performance was due solely to our inability to examine, with clarity, what Windows is really doing most of the time. We have since concluded that server optimization is limited by the tester's knowledge of the client operating system processes. The server's performance is affected by the processes of the client in such a way as to preclude any straightforward scalability of client-to-server ratio, at least in large data type applications such as imaging.

Technology Marches On

In its initial implementations, then, Windows imaging performance has proven to be less than adequate. Yet businesses are moving to Windows-based applications in droves. In fact, many have implemented Windows-based imaging systems and don't seem to notice any performance difficulties. Part of the explanation for this lies in the combination of today's speedy client hardware with the sheer speed and capacity of a NetWare server. The NetWare client-server paradigm is so powerful that it apparently masks the effects of slow-running or poorly written application code--provided, of course, that you can afford the increased hardware costs for both the client and the server.

Looked at in context with the power of NetWare, the real appeal of the GUI environment is the flexibility of the front-end. It is the ability to integrate heterogeneous applications under a common interface. For the many businesses who use Windows and Windows-based imaging applications, this flexibility may be the silver lining shining through the dark clouds.

In 1994, we were implementing object-oriented test programs as a way to stay ahead of the technology curve for network testing in the industry. Changing viewers and image stores were causing coding difficulties, and using objects enabled us to adapt our program more quickly. At the same time, we were watching the market trends and estimating that we should test ahead of the migration wave. That wave was clearly heading toward object-oriented GUI programming, regardless of questionable Windows efficiency.

The important point--and the one we initially missed--is that performance considerations were not driving the move to object-oriented implementations in a GUI environment. Business concerns, not technological ones, were fueling this move.

Business vs. Technology

It is interesting to analyze how technology implementations are decided upon in the typical business environment. Presumably, the process starts with a business problem to be solved or a critical business need to be met. The business managers approach the "technologists" (programmers or systems integrators) with their problem, and the response is usually something like "Sure, we can do that." But at this point, neither the business managers nor the technologists have any idea what the final process will look like. The negotiation proceeds through successive iterations as the two sides hammer out a feasible solution. There is often a painful learning curve to overcome, and it is to the credit of programmers everywhere that production systems get implemented at all.

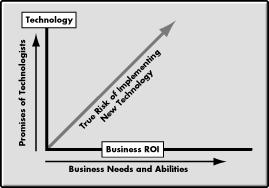

The scary part in all this comes when you look at the graph of implementation risk shown in Figure 2. The more the business needs a good return on investment (ROI) and the more promises the technologists make, the greater the risk in implementing a workable technology.

Figure 2: Estimating business vs. technology risks.

Even optimistic business managers today have become wary of the "we can do that" approach to technology. They recognize that what is good technologically is not necessarily good for the business or for the people involved. This is a fundamental difference between business management and technology management.

Technological Pros and Cons

Object-oriented programming is making small, "turn-on-a-dime" programming changes possible. But we cannot overlook the difficulties imposed by interpreted object-oriented interfaces. Current object-oriented Windows implementations have side effects: they run too slowly, and they complicate server access. Some of the immediate solutions in object-oriented programming indicate a necessary move from interpreted code to compiled code. But at the business economics and technology levels, this is expensive and in many instances means rewriting the object code. The solution may lie in "just in time" (JIT) compilers, which are bringing the speed of interpreted code closer to the speed of compiled code.

We have also seen how the ability to integrate heterogeneous applications, more so than reusability, cost of resources, and availability of tools, is a main factor driving the move to GUI applications. Yet there are distinct difficulties in integrating heterogeneous applications. Getting competing vendors to adhere strictly to the same set of standards is one. (For a good discussion of how hard it is to get vendors to agree on standards, see "A Forest to Be Reckoned With" in Application Software magazine, April 1996, p. 80.) Changes in the base operating systems are another. For example, consider the recent spate of applications that will only run under Windows 95, which is causing all kinds of implementation headaches for those whose existing hardware or software is not ready for Windows 95.

Along these same lines, not everybody's network is ready for workflow-enabled, full-color imaging integrated with e-mail and telephony. But complex options such as this are available today, even at the small business end of the spectrum. To further complicate the situation, upgrades of many commercial imaging products are headed in the direction of "one size fits all." If small businesses need a full-blown, distributed image migration and archive tool, their underlying system requirements move up the scale to match those of large interdepartmental and corporate systems. From a configurational point of view, they can no longer be considered small businesses because of their imaging system requirements.

Regardless of these pros and cons, many business managers feel it is more important to have technology that can be migrated and integrated consistently. For large businesses, consistency is the underlying rule of success. It is one of the main reasons why large businesses are moving to cohesive office environments in which technologies are all the same from one time period to another across the business. And that brings us to the focal point of our discussion: business process re-engineering.

Business Process Re-engineering

"Business process re-engineering" is a term that has acquired many connotations over recent years. To some, it carries the same connotation as "downsizing"--now an infamous euphemism for laying off company personnel. The term "right-sizing" has been proposed as being more descriptive of the actual purpose for BPR: to restructure a business's processes and personnel to improve efficiency, while increasing the return on investment.

This AppNote is not about how to do BPR; it merely observes the business need for technologies which aid in the optimization of business processes, and which lend themselves to examination regarding the overall efficiency of implementation.

Note that there are two distinct parts involved in process restructuring--the business part and the technology part. We will focus on the technological implementations and implications.

For in-depth information on business process re-engineering, contact the AIIM Bookstore: 1100 Wayne Ave., Ste. 1100, Silver Spring, MD 20910, (301) 587-8202.

The Way It Was

Five years ago, imaging systems were based on mainframe and minicomputer platforms and cost over half a million dollars at the high end. At the low end, PC-based systems were just starting to become available. These early PC systems offered basic imaging functions--capture, index, store and retrieve, then archive--at a significantly lower cost (in the tens of thousands of dollars).

Back then, the term "re-engineering" was applied in the imaging arena to depict a need for change toward less expensive technology. To existing installations, re-engineering meant tinkering with your business's productivity. To new installations, it meant taking some risks and hoping you'd come out on top. For many businesses, re-engineering was the competitive edge that wiped away their competition. For others, it was the fatal tide that washed them away.

Today, the term "re-engineering" has a more robust connotation. It can encompass many different technologies besides just imaging, such as workflow of various types. In fact, the terms "imaging" and "workflow" are often used to describe the same integrated technologies. As we have seen, most of these are event driven and there is a greater tendency to see objects incorporated into the applications.

Based on our recent research, as well as consumer purchasing trends, we believe the success of any business process re-engineering effort is predicated on the implementer's ability to institute new equipment and change software as easily as possible. Efforts by large companies--such as General Motors and EDS--to institute consistent office environments are vital at the high end of corporate computing.

Generally, businesses want to upgrade to more effective, less costly products. However, new hardware, new software, and new applications are not always seen as more efficient and less expensive by business managers. Even technologists have difficulty justifying the acquisition of new technology, especially at the application level, when the business management and end-user communities perceive the currently implemented technology to be working just fine.

You Are Where You Are!

When contemplating any business process re-engineering decision, it is important to start from your existing hardware platform. The current hardware configuration, along with commercially available software, should be of utmost concern. You should ask yourself questions such as:

- Should my business pursue the next generation of imaging products to maintain a competitive edge? What are the advantages the new products offer?

- Will a new imaging implementation perform up to my expectations?

- What hardware and software configuration will I have to match to obtain the desired performance?

- What about integrated solutions and heterogeneous application suites? Do they really extend functionality?

These are serious questions for technology directors, implementers, business chiefs, and network administrators alike.

Developing New Test Bench Configurations

In recent months our testing matrix has expanded significantly. We wanted to extend our imaging tests to the NetWare 4 and Novell Directory Services (NDS) environment, and even mix in some tests on the Windows NT platform. That makes for a lot of possible combinations of server versions (NetWare 3, NetWare 4, Green River, and Windows NT Server) and client versions (Windows 3.x, Windows 95, and Windows NT). Needless to say, our original goal--to present performance information based on a few representative imaging systems running under different versions of NetWare with different clients--is in need of a drastic overhaul.
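To give a sense of how quickly this matrix grows, the server and client versions named above can be enumerated directly. The following Python sketch is illustrative only; the function name `build_test_matrix` is hypothetical, and the lists contain just the versions mentioned in the text.

```python
from itertools import product

# Server and client versions as listed in the text.
SERVERS = ["NetWare 3", "NetWare 4", "Green River", "Windows NT Server"]
CLIENTS = ["Windows 3.x", "Windows 95", "Windows NT"]


def build_test_matrix(servers, clients):
    """Return every (server, client) pairing as a list of tuples."""
    return list(product(servers, clients))


matrix = build_test_matrix(SERVERS, CLIENTS)
print(len(matrix), "server/client combinations")
for server, client in matrix:
    print(f"  {server:<18} with {client}")
```

Four server versions and three client versions already yield twelve pairings before any variation in imaging application, hardware, or network layout is considered, and each further dimension multiplies the count again.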

In addition, the observations we have chronicled in this AppNote have led us to reconsider how we evaluate both the hardware and software requirements of our Windows-based imaging implementations. The predominantly heavy bandwidth utilization imposed against our test bench by object-oriented, Windows-based applications and by Windows internal processing caused us real concern for how one might install and later migrate applications. We are now examining that concern with respect to the high-end hardware we were using and the configurations we had implemented in acquiring our test data, versus the implementations our customers were using or considering implementing.

From these concerns, two main factors have emerged as being worthy of consideration:

Are the hardware and software implementations used in testing both commercially available and implementable by the customer? What value can be added, beyond the existing industry benchmark tests, to enable the customer to obtain the highest return on investment?

Is the test configuration representative of a customer implementation? Can it be migrated to a comprehensive solution which better exemplifies the goals of integrated networked applications?

Together, these two factors cover what we believe to be the direction that businesses and their networks are headed. And we believe the direction of our testing should support the way businesses operate.

To provide truly useful consumer configuration and performance information, it seems appropriate to cover many separate configurations with differing hardware. Yet the wide variety of available hardware encompasses more technology than any one set of configurations or benchmarks could possibly hope to describe adequately. However, if we specify a configuration within a range of similar configurations, consumers have the ability to discern whether their existing configurations are inferior, equivalent, or superior to the test model. Therefore, for testing purposes, we will propose several different test models, with different application software combinations loaded and participating during the tests.

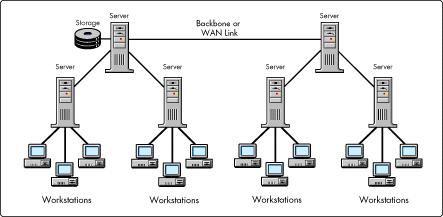

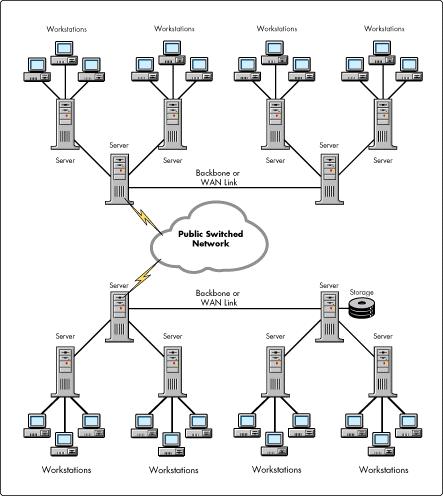

Our current plan is to set up test configurations ranging from the Small Business to the Global Corporation. Representative system diagrams are shown in Figures 3 through 6.

Figure 3: Small business test configuration.

Figure 4: Departmental LAN test configuration.

Figure 5: Enterprise test configuration.

Figure 6: Global network test configuration.

Ideally, not every configuration will use the same combination of software. We do not expect that a small business will be confronted with the complexities of managing a global network while transmitting hundreds of images simultaneously across ISDN and T1 lines. On the other hand, we do expect that a small business might well be running telephony applications as well as communications-based workflow. Hardware configurations will be conceptualized around what has been used in real-world implementations, and what is economically feasible for a particular configuration.

This means our testing will have to be more than benchmarking or limits testing or workload characterization. It will have to encompass factors such as business needs met, adequacy of features, implementability, and potentially some cost reporting. All of this must fit within a framework of practical and secure business implementation. In any event, our testing can no longer support performance studies in a specific environment.

Conclusion

Our research into LAN-based imaging systems has produced several side benefits. Examinations of object-oriented systems have been particularly helpful, in that they have disclosed significant performance limitations in the client-server model. To surmount these limitations will require increased capacity and performance at the workstation, coupled with an increase in server capabilities. Specifically, we see the need for powerful client hardware with significant RAM, hard disk, and video resources initially, followed by a growing demand for greater computing power and bandwidth at the server.

It is important to realize that object-oriented technology and distributed objects are still in their infancy in distributed and cooperative processing models. In the next go-round, objects migrated to the server will require more computing power or more dedicated servers. On the other hand, they will make possible a new world of integrated functionality that we can only dream about today.

We have come to the conclusion that, if our future imaging testing is to be useful for the customer, we must be cognizant of real-world implementations and limitations. The general applicability of our previous testing has become muddled by the onslaught of new technology which must be integrated into business processes, if businesses are to achieve an acceptable return on investment.

Accordingly, we have identified a number of new test configuration models for future testing, as described in this AppNote. While these models are not all-encompassing, they should provide comparative milestones for software and configuration migration. Hopefully, they will help you better determine how and when to implement the applications you will need to support your business processes.

* Originally published in Novell AppNotes
