Managing Novell Directory Services Traffic Across a WAN: Part 1

Consultant

Novell Consulting Services

01 Jun 1996

Novell Directory Services (NDS) was originally designed for enterprise networks with permanent connectivity between remote locations. Dial-up connections to a WAN can cause some concerns about the best way to manage NDS traffic in a switched wide area networking environment. This Application Note presents a general overview of the NDS processes that normally generate network traffic, and then presents recommendations on how to reduce the amount of traffic and minimize bandwidth requirements over a WAN.

RELATED APPNOTES

Apr 96 "Ten Techniques to Increase NDS Performance and Reliability"

Jan 95 "What's New in NetWare 4.1"

Mar 94 "Management Procedures for Directory Services"

Apr 93 NetWare 4.0 Special Edition

- Introduction

- NDS Traffic Generators

- Summary of NDS Traffic Generators

- Reducing NDS WAN Traffic

- Summary

Introduction

Novell Directory Services (NDS) was originally designed for enterprise networks with permanent connectivity between remote locations. Dial-up connections to a WAN can cause some concerns about the best way to manage NDS traffic in a switched wide-area-networking environment. To this end, this AppNote presents a general overview of the NDS processes that normally generate network traffic, and then presents recommendations on how to reduce the amount of traffic and minimize NDS bandwidth requirements over a WAN.

The recommendations presented in this Application Note are based on the experiences Novell Consulting Services has gained while supporting customers with many different and challenging switched wide area networks. These customer network configurations are as diverse as large ISDN networks with over 100 remote sites, large X.25 networks, and satellite networks with a 1.5-second round trip propagation delay.

Customers consulted fit into one of two general categories. The first category consists of customers with permanent WANs. These customers are generally concerned about reducing the amount of traffic to the minimum amount possible so as to get the best use out of the limited bandwidth on the WAN. Customers whose WANs comprise satellite links are particularly interested in the effects of propagation delay on NDS performance.

The other category of customers includes those with dial-up WANs, particularly ISDN dial-up WANs. For the most part, these customers have the same concerns as customers with permanently linked WANs. In addition, they are interested in the frequency of WAN traffic, because it costs money every time the ISDN line is opened. These customers are also concerned about minimizing connection time, as they are charged while the ISDN line is open.

Until more refined tools for managing NDS traffic on switched networks become available, the knowledge-base gained from supporting these varied WANs is a reasonably good source for making the proper tactical decisions.

This AppNote assumes the reader is familiar with basic NDS concepts and terminology. See the related AppNotes listed on the title page of this AppNote for additional references.

Note: All information and tests described in this AppNote are based on DS.NLM 4.89c.

NDS Traffic Generators

This section describes the various NDS processes that generate traffic. Not all of the NDS processes are discussed here, only those which generate traffic across the network. These include:

Immediate sync process

Slow sync process

Heartbeat process

Schema sync process

Limber process

Backlink process

Connection management process

Server status check

The discussion of each traffic generator includes examples of how you can view the processes from the server console to monitor the traffic being generated on your own network. A summary of this information is included as a sidebar to this AppNote.

Note: Time synchronization also generates traffic on the network. Dealing with time synchronization traffic across a WAN link will be covered in Part 2 of this series.

Immediate Sync Process

The first NDS traffic generator is the immediate sync process, which is responsible for synchronizing NDS object and attribute changes between replicas. This immediate or "fast" synchronization occurs ten seconds after a change has been made. The 10-second delay is not configurable. It exists to allow multiple changes to the same object to accumulate. Changes made to an object within that 10-second period are grouped together and sent in a single sync process.

All the servers in the replica ring are involved in the synchronization cycle, including servers that hold a master, read/write, read-only, or subordinate reference replica. If you have a partition with three replicas, for example, a change made to an object in the master or a read/write replica is synchronized to the other replicas ten seconds after the change is saved.

To monitor the immediate sync process, type the following commands at the server console:

SET DSTRACE = ON

SET DSTRACE = +SYNC

SET DSTRACE = +IN

To trigger the synchronization cycle, use NWADMIN to make a minor change to an object in one of the replicas (such as updating a user's phone number). Watch the DSTRACE screen for the synchronization cycle to begin ten seconds later.

Figure 1 illustrates the DSTRACE screen during the immediate synchronization process. In this example, the phone number for the User object User1.Test.Novell has been changed. The object is in the partition named Test.Novell. The master replica of this partition is on server FS1 and a replica is also stored on FS2.

Figure 1: DSTRACE screen showing the immediate synchronization process.

SYNC: Start sync of partition <Test.Novell> state:[0] type:[0]

SYNC: Start outbound sync with (1) [010000B8]<FS2.Novell>

SENDING TO ------> CN=FS2

SYNC: sending updates to server <CN=FS2>

SYNC:[040000BB][(03:49:56),1,1] user1.Test.Novell (User)

SYNC: Objects: 1, total changes: 5, sent to server <CN=FS2>

SYNC: update to server <CN=FS2> successfully completed

SYNC: SkulkPartition for <Test.Novell> succeeded

SYNC: End sync of partition <Test.Novell> All processed = Yes.

LANalyzer Packet Traces. Information for this AppNote was gathered from a small test network consisting of two servers on a network segment. A LANalyzer connected to the segment gathered all the traffic that passed between the two servers. We used Novell's LANalyzer for Windows because, unlike some other network analyzers, it fully decodes NDS traffic. The LANalyzer packet traces give you an exact description of each layer and tell you exactly what the packets are, the names of the objects that have been synchronized, and the processes that were used to synchronize them.

Note that the NDS protocol resides above the NetWare Core Protocol (NCP) layer. Thus a LANalyzer trace shows an IPX header, followed by an NCP header, followed by the NDS header, followed by the NDS data.

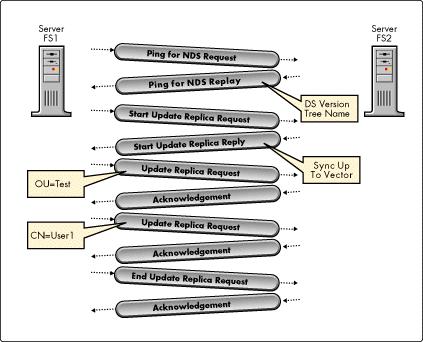

Figure 2 illustrates the NDS synchronization process described above. The LANalyzer packet trace in Figure 3 lists the actual packets that are exchanged during this process.

Figure 2: Packets exchanged during the immediate synchronization process.

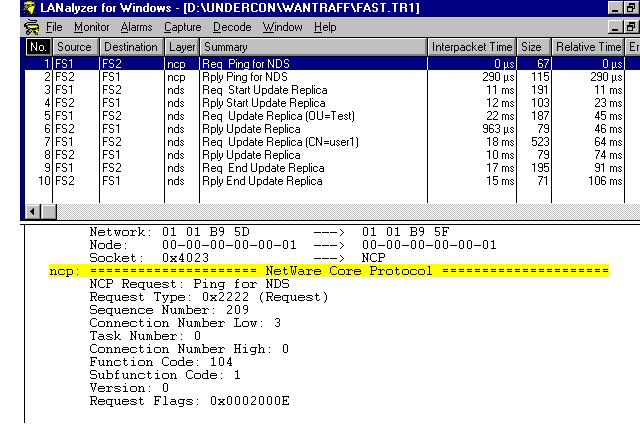

Figure 3: LANalyzer trace of synchronization traffic.

When file server FS1 synchronizes to file server FS2, the first packet appearing on the wire is the PING for NDS (Packet number 1).

File server FS2 responds with the DS version and the tree name (Packet 2).

The next packet from server FS1 is a Start Update Replica request containing the replica name (Packet 3).

Server FS2 responds with its Sync Up To vector (Packet 4).

Server FS1 determines which changes need to be sent to server FS2 and sends them, one object per packet. In this example the objects OU=Test and CN=User1 are updated (Packets 5 and 7).

Note: Only "delta" or changed attributes are sent in these packets.

Each packet is acknowledged by server FS2 (Packets 6 and 8).

After server FS1 has sent all changes to server FS2, server FS1 sends an End Update Replica request containing the new time stamp vector (Packet 9).

The End Update Replica packet is acknowledged by server FS2 (Packet 10).
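The exchange above implies a simple packet count for one outbound sync to one server. The following is a rough sketch only, assuming the pattern in Figure 3 holds in general: one request/reply pair each for the ping, Start Update Replica, and End Update Replica, plus one pair per changed object. The function name is illustrative and not part of any Novell tool.

```python
def sync_packet_count(changed_objects: int) -> int:
    """Estimate packets for one outbound sync to a single server.

    Assumes the Figure 3 pattern: ping, Start Update Replica, and
    End Update Replica each cost a request plus a reply, and every
    changed object costs one update packet plus one acknowledgement.
    """
    if changed_objects < 0:
        raise ValueError("changed_objects must be non-negative")
    fixed_pairs = 3  # ping, start update, end update
    return 2 * (fixed_pairs + changed_objects)


# Two changed objects (OU=Test and CN=User1), as in the trace above.
print(sync_packet_count(2))  # 10
```

The count applies per target server, so a replica ring with several servers multiplies it accordingly.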

Slow Sync Process

The slow sync process occurs 22 minutes after making certain changes to an object. The most common changes that trigger the slow sync process occur when a user logs in or logs out. When a user logs in or out, the Login Time, Last Login Time, Network Address, and Revision attributes are updated with the newest information.

The necessity of the slow sync process becomes apparent when you consider what would happen if logins were handled via the immediate sync process. If you had 100 users all logging in at the start of the work day between 8:00 and 8:15 a.m., your network could conceivably grind to a halt as it attempted to synchronize changed login attributes every ten seconds. To handle such situations, the login attributes mentioned above and some other special attributes are synchronized 22 minutes after the change has occurred. The 22 minute delay time is not configurable.

The slow sync process takes effect only in the absence of an immediate sync process. For example, if a user changes his or her password or login script during the 22-minute interval of a slow sync process, an immediate sync process occurs. Queued changes to slow sync attributes are also updated at this time. As long as the network traffic consists only of users logging in and out, you will see a slow sync cycle 22 minutes after the first user logs in.
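The interplay between the two delays can be sketched with a small simulation. This is a simplified model and not the DS.NLM scheduler: it assumes at most one sync is pending per partition, that any sync sends all queued changes, and that a change never pushes an already scheduled sync later. The names `sync_times`, `FAST_DELAY`, and `SLOW_DELAY` are made up for the illustration.

```python
FAST_DELAY = 10        # seconds, immediate sync
SLOW_DELAY = 22 * 60   # seconds, slow sync


def sync_times(changes):
    """Return the times (in seconds) at which a sync cycle would start.

    `changes` is a time-ordered list of (time_in_seconds, kind) tuples,
    where kind is "fast" (e.g. a phone number or password change) or
    "slow" (e.g. login attributes). Simplified model: at most one sync
    is pending, and any sync sends all queued changes.
    """
    cycles = []
    pending = None  # time of the next scheduled sync, if any
    for when, kind in changes:
        if pending is not None and when >= pending:
            cycles.append(pending)  # the scheduled sync fired first
            pending = None
        delay = FAST_DELAY if kind == "fast" else SLOW_DELAY
        due = when + delay
        pending = due if pending is None else min(pending, due)
    if pending is not None:
        cycles.append(pending)
    return cycles


# 100 logins spread evenly over 15 minutes, starting at t = 0.
logins = [(i * 9, "slow") for i in range(100)]
print(sync_times(logins))                                  # [1320]
print(len(sync_times([(t, "fast") for t, _ in logins])))   # 50
```

Under this model, the 100 logins produce a single cycle 22 minutes after the first login, whereas treating the same changes as immediate-sync changes would produce 50 cycles in the same quarter hour.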

To monitor the slow sync process, type the following commands at the server console:

SET DSTRACE = ON

SET DSTRACE = +SYNC

SET DSTRACE = +IN

To trigger the synchronization cycle, log in and make no further changes to the NDS tree. Watch the DSTRACE screen for the synchronization cycle to begin 22 minutes later.

The information displayed on the DSTRACE screen and the LANalyzer trace of the packets are similar to those shown above for the immediate sync process. As with the immediate sync process, the slow sync process synchronizes to all servers in the replica ring, including servers that hold the master, read/write, read only, and subordinate reference replicas.

Heartbeat Process

To ensure the consistency of objects within a replica ring, each server having at least one replica performs a heartbeat process every 30 minutes. This heartbeat occurs regardless of whether or not an immediate sync or slow sync has occurred in the interim.

To watch a heartbeat occur, type the same commands at the console which you typed to test an immediate sync process:

SET DSTRACE = ON

SET DSTRACE = +SYNC

SET DSTRACE = +IN

You can then force the heartbeat process by typing the following command at the server console:

SET DSTRACE = *H

Figure 4 shows an example of a DSTRACE screen during the heartbeat process. In this case, the server performing the heartbeat holds the master copy of the [ROOT] partition. Server CN=FS2.O=NOVELL also holds a replica of the [ROOT] partition.

Figure 4: DSTRACE screen showing the heartbeat process.

(96/05/22 03:28:27)

SYNC: Start outbound sync with (2) [010001D8]<FS2.Novell>

SENDING TO ------> CN=FS2

SYNC: sending updates to server

SYNC: update to server successfully completed

The heartbeat occurs whether or not users are active on the network. Daytime, nighttime, weekends, holidays: it happens every 30 minutes regardless.

If a server holds more than one replica, the heartbeat cycle synchronizes all of the replicas on the server. (By contrast, in the case of an immediate sync only the replica in which the change occurred takes part in the sync cycle.) Read-only and subordinate reference replicas participate in the heartbeat process to preserve consistency across the partition.

Schema Sync Process

The schema sync process performs two important functions. First, it ensures that the schema is consistent, similar to the way the heartbeat ensures that objects are consistent. Second, it synchronizes changes to the schema partition. Because all servers within an NDS tree must have an identical schema, extensions to the schema require that information to be synchronized to all other servers in the tree. By default, the schema sync process occurs every 240 minutes, but it is configurable.

To see how the schema sync process operates, type the following command at the server console:

SET DSTRACE = +SCHEMA

To force the schema sync process to occur immediately instead of waiting for up to 240 minutes, type the following command at the server console:

SET DSTRACE = *SS

You can then monitor the traffic associated with the schema sync process. You can also see which servers are involved.

Figure 5 illustrates the DSTRACE screen on a server performing the schema sync process to server CN=FS2.O=NOVELL.

Figure 5: DSTRACE screen showing the schema sync process.

(96/05/22 04:06:22)

SCHEMA: Beginning Schema Synchronization.

------> START schema update to <FS2.Novell>.

SCHEMA LOCAL:

(03:48:19), 1, 0

(03:48:19), 1, 0

(04:06:22), 2, 0

SCHEMA REMOTE:

(04:06:21), 1, 0

(04:06:21), 1, 0

(04:05:59), 2, 0

Sending DELETED CLASSES . . .

Sending DELETED ATTRIBUTES . . .

Sending PRESENT ATTRIBUTES . . .

Sending PRESENT CLASSES . . .

------> END schema update.

(96/05/22 04:06:22)SchemaPurger: Processing deleted Classes

(96/05/22 04:06:22)SchemaPurger: Processing deleted Attributes

SCHEMA: All Processed = Yes.

The schema sync process involves not only servers holding replicas, but servers without any replicas as well (since all NetWare 4.1 servers hold a copy of the schema). To determine which servers participate when a server decides to synchronize its schema, NDS uses a dynamically built "poll list." When you run the DSTRACE command with the switches listed above, you may see messages relating to servers being added to or removed from the poll list.

Basically, what's happening here is this. The source server reads all of the replica pointer tables and makes a list of potential target servers to receive the schema updates. This list includes servers that have no replicas stored on them. The source server then pings each target server in the list to determine how far down in the tree its root-most replica is. If the replica depth of the target server is less than the source server's depth, that server is dropped from the list.

Thus servers which have a copy of the root partition will probably have to communicate with many other servers in the tree during a schema synchronization cycle. Servers with replicas that are at the lower branches of the tree will most likely sync to just a few servers in their own replica ring. Of course, this depends on how you have placed partitions and replicas within the NDS tree.
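The pruning rule described above can be sketched as follows. This is an illustration of the rule as stated in the text, not the DS.NLM implementation. Depth 0 stands for a server whose root-most replica is [Root]. The text does not say how servers without replicas are compared, so treating them as infinitely deep (and therefore always kept) is an assumption of this sketch, as are the function name and the server names FS3 to FS5.

```python
import math


def build_poll_list(source_depth, candidates):
    """Return the servers a source would send schema updates to.

    `candidates` maps server name -> depth of its root-most replica
    (0 means it holds a replica of [Root]). Servers with no replicas
    are given as None; treating them as infinitely deep, so they are
    always kept, is an assumption of this sketch.
    """
    poll_list = []
    for name in sorted(candidates):
        depth = candidates[name]
        effective = math.inf if depth is None else depth
        if effective < source_depth:
            continue  # target is higher in the tree: dropped
        poll_list.append(name)
    return poll_list


servers = {"FS2": 0, "FS3": 1, "FS4": 2, "FS5": None}
print(build_poll_list(0, servers))  # ['FS2', 'FS3', 'FS4', 'FS5']
print(build_poll_list(2, servers))  # ['FS4', 'FS5']
```

A source holding [Root] keeps every candidate, while a source whose root-most replica is two levels down keeps only servers at the same depth or lower.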

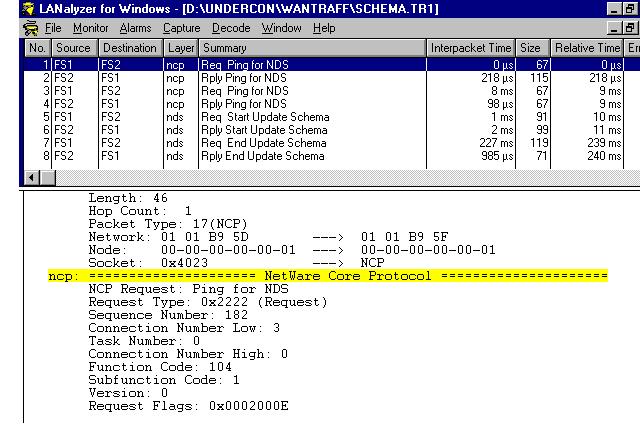

Figure 6 is a LANalyzer trace of the schema sync process between server FS1 and server FS2.

Figure 6: LANalyzer trace of the schema sync process.

As you will notice, the sequence of traffic is almost identical to the immediate synchronization process. Packets 1 to 4 are Ping for NDS requests and responses. Packet 5 is the Start Update Schema request, which is acknowledged by FS2 in Packet 6. In this example there were no changes to send, so Packets 7 and 8 indicate the end of the schema sync initiated by FS1.

Limber Process

The limber process is responsible for updating all replica pointer tables when a server changes its name or address. A server's name or IPX internal address can be changed in the AUTOEXEC.NCF file, but the changes do not take effect until the server is restarted. When a NetWare 4.1 server is started, the limber process checks to see whether the server's distinguished name or IPX address has changed. If so, the limber process updates the information in each replica pointer table in the server's partition. This process also checks to make sure the server has the correct tree name.

The limber process runs five minutes after a NetWare server is restarted and its NDS database is opened. Thereafter, the limber process runs at regular intervals of 180 minutes (not configurable). It is also triggered whenever a server address is added, changed, or deleted. (For example, this might occur when an address is added for another protocol while the server is running.)

Type the following console command to monitor the limber process when it is running.

SET DSTRACE = +LIMBER

If you don't want to wait for up to 180 minutes for the limber process to start on its own, type the following console command to force the process to run:

SET DSTRACE = *L

Figure 7 illustrates the DSTRACE screen of a server holding a read/write copy of the [ROOT] partition during the limber process. In this example, the tree name is DUS-LAB-TEST-TREE.

Figure 7: DSTRACE screen showing messages that appear during the limber process.

Limber: start connectivity check

Limber: Checking at 1 of 1, type=0, len=C Address: 9111194F0000000000010451

Limber: referral was in the same tree: <DUS-LAB-TEST-TREE>

Limber: checking address of replica [Root]: OK!

Limber: end connectivity check, all OK!

Notice on the DSTRACE screen that the process is divided into two subprocesses. The first is called "Limber" and verifies the server name and IPX address. The second is called "Lumber" and checks the tree name.

The traffic you would see on the wire for the Limber subprocess is as follows:

Ping for NDS version / reply with DS.NLM version

Resolve server name / reply (update OK)

Resolve internal IPX address / reply (update OK)

If the changes occur on the server holding the master replica of the partition, the limber process changes its local address in the replica pointer table. If the changes occur on a server holding a non-master replica, the limber process contacts the server with the master replica.

The Lumber subprocess is directed at the server holding the master replica of the root partition, since that is where a tree name modification is performed. This server is responsible for sending the name change to all other servers in the tree, using the limber process to perform the updates.

Backlink Process

In NDS, backlinks keep track of external references to objects on other servers. The backlink process performs two tasks:

For each external reference on a server, the backlink process ensures that the real object exists and is in the correct location.

The backlink process verifies all backlink attributes (only on the server holding the master replica).

The backlink process occurs two hours after the database is open and then every 780 minutes (13 hours). The interval is configurable from 2 minutes to 10,080 minutes (7 days).

Type the following console command to monitor the backlink process when it is running:

SET DSTRACE = +BLINK

If you don't want to wait for the backlink process to start on its own, type the following command to force the process to run:

SET DSTRACE = *B

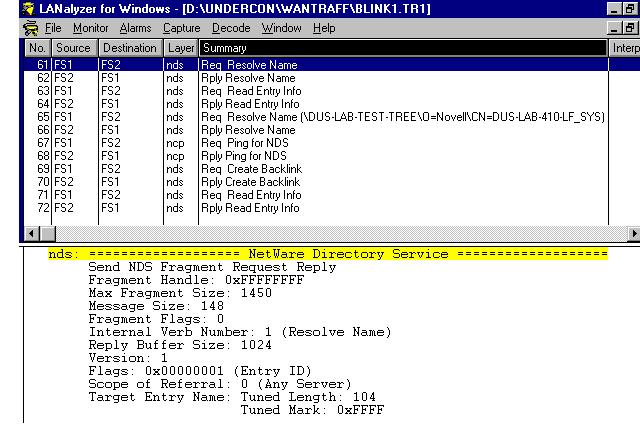

As an example of how the two parts of the backlink process operate, consider this scenario. Server 1 has an external reference to an object, and Server 2 has a writeable copy of that object. (A writeable copy of an object is one in a master or read/write replica.) When the backlink process runs, a packet exchange occurs as illustrated in Figure 8.

Figure 8: External reference traffic when synchronizing to the closest writeable copy of the object.

First, the name of the external reference is resolved to find any server with a copy of the object, and server FS2 responds (Packets 61 and 62).

Server FS1 reads the full distinguished name of the object from FS2 (Packets 63 and 64).

FS1 again resolves the name of the external reference, this time to a server holding a master or read/write copy of the object. In our example FS2 holds the master replica of the partition, so it responds (Packets 65 and 66).

Server FS1 pings FS2 for the NDS version (Packets 67 and 68).

Server FS1 requests to create a backlink for the external reference and FS2 responds with the object class (Packets 69 and 70).

Finally, FS1 reads the distinguished name of the object on FS2 (Packets 71 and 72).

This same sequence of events occurs for each external reference on Server FS1.

The backlink process tries to find the closest master or read/write replica containing the object. The closest copy is based on the IPX tick count, not on the IPX hop count, from the perspective of the server holding the external reference.

With this being the case, you might worry that if a server has a large number of external references, it will jump from server to server all over the tree and generate a lot of traffic. In reality, the backlink process is more orderly, verifying backlinks one server at a time. For example, suppose the backlink process discovers it has 500 external references to check: 100 on each of five other servers. It will first gather together the 100 objects for Server 2 and perform all those checks in one batch. Once those checks are confirmed, the backlink process moves on to the checks for Server 3, then Server 4, and so on. This batch approach ensures that the backlink process doesn't randomly jump around the network.
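The batching behavior described above amounts to grouping external references by the server that holds the real object. The following is a minimal sketch of that grouping, assuming each reference can be represented as an (object name, server name) pair. The object and server names are hypothetical, and the function is an illustration rather than the actual backlink code.

```python
from collections import defaultdict


def batch_by_server(external_refs):
    """Group (object_name, server_name) pairs into one batch per server.

    Servers keep the order in which they are first seen, so all checks
    for one server can finish before the next server is contacted.
    """
    batches = defaultdict(list)
    for obj, server in external_refs:
        batches[server].append(obj)
    return dict(batches)


# 500 hypothetical external references, interleaved across five servers.
refs = [(f"CN=User{i}", f"Server{2 + i % 5}") for i in range(500)]
for server, objects in batch_by_server(refs).items():
    print(server, len(objects))
```

Even though the references arrive interleaved, the result is five batches of 100 checks, one per server, which is what keeps a dial-up link from being opened to each remote server over and over.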

The second part of the backlink process takes place only on the server with the master copy of the partition, to make sure the external reference exists. The NDS Backlink attribute lists the name of the server holding the external reference and the object ID of the external reference. The backlink process checks this attribute to verify the correct location of objects' external references.

Figure 9 shows the LANalyzer trace for this part of the backlink process.

Figure 9: Backlink attribute traffic when synchronizing to the server with the external reference.

This process first generates a ping for the NDS version (Packets 1 and 2).

This is followed by a Resolve Name request and reply for each backlink attribute. In this example, server FS2 verifies six different backlink attributes (Packets 3, 5, 7, 9, 11, and 13). Since the attributes point to external references on server FS1, FS1 responds to each Resolve Name request (Packets 4, 6, 8, 10, 12, and 14).

Note: Because an object may have external references on several servers, the backlink attribute may be multi-valued (store more than one value).

Connection Management Process

The connection management process is triggered whenever a server-to-server connection has to be created. This process exists to build connections so servers can send NCP packets to each other. Because NCP requires a connection number in order to send NDS-related changes to a server, a server must establish a connection, and so obtain a connection number, before it can send those changes.

These "virtual client" connections are also used for security, to prevent servers that are not part of the tree from writing changes into NDS. (See the NetNote "Understanding the DS Client" in the April 1996 Novell Application Notes for more information about this type of connection between NetWare 4.x servers.)

If such a connection is lost, it will eventually be re-established. The process that establishes server-to-server connections for NDS is part of the connection management process.

These server-to-server connections are "authenticated but not logged in" connections; they do not consume user licenses. To see these connections from other servers in the tree, load the MONITOR utility on any server and select the Connections option.

To see the connection management process as it occurs, type:

SET DSTRACE = +VCLIENT

Connections will always exist between servers in a replica ring. Connections may also be created between servers due to the schema and backlink processes. (Some connections may also be created by time synchronization. This topic will be covered in Part 2 of this series.)

Note: All connections created in NetWare, including these server-to-server connections, are maintained by the Watchdog process.

Server Status Check Process

The following real-life experience shows the importance of the final traffic generator process: the server status check process. A customer had placed a server with no replicas in a remote location, thinking that this would prevent NDS synchronization traffic from being sent across the WAN link. I quickly pointed out one problem with this approach: when users log in, they have to build connections across the WAN. The customer reasoned that the users could live with the initial slowness during login, and once they're logged in, there would be no heartbeat synchronization traffic to deal with. When I asked what he planned to do about backlink process traffic, he replied that it only occurs every 780 minutes and he could handle that. Of course, the schema sync process still posed a problem.

Upon closer examination of this configuration in the lab, I discovered that if you have a server with no replicas on it, that server will check its server status every 360 seconds. The check will be directed to the server holding the closest writable copy of the server object. At the physical level, four packets are exchanged: a Resolve Server Name request and response, and a Read Server Status request and acknowledgement. This traffic is directed to the closest server holding a master or read/write replica containing the server object.

So while at first glance placing servers with no replicas in a remote location might appear to be a good way of reducing network traffic, be very cautious with this approach. The server will want to check its status at regular intervals to ensure that it is still in the tree. Consider also the unavoidable backlink process and schema sync process traffic.

Summary of NDS Traffic Generators

| Process | Function | Frequency | Viewing | Forcing |
| --- | --- | --- | --- | --- |
| Immediate Sync | Synchronizes critical changes | 10 seconds after a change is saved | SET DSTRACE=ON; SET DSTRACE=+SYNC; SET DSTRACE=+IN | Create an object or change an attribute |
| Slow Sync | Synchronizes non-critical changes | 22 minutes after a change | SET DSTRACE=ON; SET DSTRACE=+SYNC; SET DSTRACE=+IN | Log in or log out; wait 22 minutes |
| Heartbeat | Ensures replica consistency | 30 minutes (configurable) | SET DSTRACE=ON; SET DSTRACE=+SYNC; SET DSTRACE=+IN | SET DSTRACE=*H |
| Schema Sync | Ensures schema consistency | 240 minutes (configurable), or when schema changes | SET DSTRACE=+SCHEMA | SET DSTRACE=*SS |
| Limber | Checks Server object for changes to server name or IPX address | 180 minutes, or when a server address is added, changed, or deleted | SET DSTRACE=+LIMBER | SET DSTRACE=*L |
| Backlink | External reference consistency | 780 minutes; configurable between 2 and 10,080 minutes (7 days) | SET DSTRACE=+BLINK | SET DSTRACE=*B |
| Connection Management | Creates server-to-server connections | N/A | SET DSTRACE=+VCLIENT | N/A |
| Server Status | Status check for servers with no replica | 360 seconds | N/A | N/A |
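For dial-up links, the frequency of the periodic processes matters as much as their volume. The following back-of-the-envelope sketch converts the default intervals from the summary above into runs per day. It covers only the timer-driven processes, and it says nothing about how many packets each run sends or how many target servers are involved. The function and dictionary names are illustrative.

```python
MINUTES_PER_DAY = 24 * 60

# Default intervals in minutes, taken from the summary above.
DEFAULT_INTERVALS = {
    "Heartbeat": 30,
    "Schema Sync": 240,
    "Limber": 180,
    "Backlink": 780,
    "Server Status": 6,  # 360 seconds
}


def runs_per_day(intervals):
    """Return how many times each periodic process runs in 24 hours."""
    return {name: MINUTES_PER_DAY / minutes
            for name, minutes in intervals.items()}


for name, runs in runs_per_day(DEFAULT_INTERVALS).items():
    print(f"{name:14s} {runs:6.1f} runs/day")
```

With the defaults this gives 48 heartbeats, 6 schema syncs, 8 limber runs, and just under 2 backlink runs per day for a server holding replicas, and 240 status checks per day for a server holding none. If each run caused the line to be dialed, those figures would approximate the number of calls per remote server per day.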

Other Sources of Network Traffic

There are many other sources of traffic that need to be recognized and controlled in switched WAN environments. These include serialization packets, watchdog packets, SPX keep-alive packets, queue polling, mapped drive polling, and so on. It is outside the scope of this AppNote to cover methods for controlling this traffic. These topics have been partially covered in previous AppNotes:

"Configuring Asynchronous Connections with the NetWare MultiProtocol Router 3.0 Software" (July 1995, p. 41)

"Interconnecting NetWare Networks with ISDN" (March 1996, p. 23)

Reducing NDS WAN Traffic

Now that we know what processes in NDS are causing traffic, we can more effectively discuss ways to reduce NDS and time sync traffic over WANs. This section describes some of the solutions you should consider when designing or configuring a network.

Tree Design

Thoughtful design of your NDS tree is one of the key ways to reduce the amount of NDS synchronization traffic that is generated. Covering this subject in detail would take many pages. For now, we refer you to "Universal Guidelines for NDS Tree Design" on page 17 of the April 1996 Novell Application Notes. A more comprehensive treatment will be forthcoming in a future AppNote.

PINGFILT.NLM

PINGFILT.NLM, a tool originally developed as part of the Novell Consulting Services Toolkit, is now shipping with the NetWare MultiProtocol Router software. When loaded on a server, this NLM stops the server from sending outbound NDS traffic. It is configurable on a per-server basis via the DSFILTER.NLM configuration tool. For instance, you can tell Server 1 not to send any NDS traffic to Server 2, but to keep sending NDS traffic to Server 3. PINGFILT does not affect inbound traffic.

Naturally, you don't want to stop NDS traffic forever, so you can configure the filter to let NDS traffic pass at a particular time of day and day of the week. NDS changes only propagate when the filter is open. If changes are made while the filter is blocking NDS traffic, the updates will be queued on the server where the changes were written and will not be written to the other replicas until the filter is open.
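The time-window behavior can be pictured with a short sketch. This does not reflect the actual PINGFILT or DSFILTER configuration format, which is not shown here; the schedule structure, the function names, and the one-hour nightly window are all hypothetical. It only illustrates the idea that updates stay queued on the originating server until the filter opens.

```python
from datetime import datetime, time

# Hypothetical schedule: weekday numbers (Monday = 0) mapped to the
# daily window during which NDS traffic is allowed to pass.
OPEN_WINDOWS = {day: (time(1, 0), time(2, 0)) for day in range(5)}


def filter_is_open(now, windows=OPEN_WINDOWS):
    """Return True if NDS traffic may pass at the datetime `now`."""
    window = windows.get(now.weekday())
    if window is None:
        return False
    start, end = window
    return start <= now.time() < end


def deliver(queued_updates, now, windows=OPEN_WINDOWS):
    """Return (sent, still_queued) for the updates waiting on a server."""
    if filter_is_open(now, windows):
        return list(queued_updates), []
    return [], list(queued_updates)


queue = ["CN=User1: Telephone Number"]
print(deliver(queue, datetime(1996, 6, 3, 14, 30)))  # Monday afternoon
print(deliver(queue, datetime(1996, 6, 4, 1, 15)))   # Tuesday 01:15
```

In the first call the update stays queued because the filter is closed; in the second the window is open and the update is sent.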

Effective filtering dictates that you load PINGFILT.NLM on all servers in your tree. This is required because you have to take into consideration the backlink process, the limber process, and the schema sync process.

Caution: PINGFILT also filters traffic necessary for NDS tree walking. If you configure a server to communicate with no other server in the tree, you can only get the information from that local server. If you try to walk up or down the tree from information not stored on the server, you will get -626, -632 or -663 errors in DSTRACE.

Although you may want to stop all NDS traffic between servers, the servers must communicate occasionally to preserve consistency in the tree. The filter should be open a minimum of once per working day to allow the link to be open long enough for a heartbeat, a schema sync, and a backlink process to occur.

Caution: All filters should be open when you are performing NDS partition operations such as splits and joins.

SET Commands

There are several SET commands for controlling the time interval between NDS processes. These set commands are configurable via the SERVMAN.NLM utility on a per-server basis. The following table lists these SET commands with their default values and ranges. (Note that the two commands listed in boldface are previously undocumented.)

| Command | Description | Default | Value Range (min.) |
| --- | --- | --- | --- |
| SET DSTRACE=ON; SET DSTRACE=*P | Displays the current settings of all DS timeouts and configuration parameters on the DSTRACE screen. | N/A | N/A |
| SET NDS INACTIVITY SYNCHRONIZATION INTERVAL = <value> | Configures how often the heartbeat process occurs. | 30 min. | 2 - 1440 |
| SET NDS BACKLINK INTERVAL=<value> | Configures how often the backlink process runs. | 780 min. | 2 - 10,080 |
| SET DSTRACE=!I<value> | Controls how often the schema sync process runs. | 240 min. | N/A |

Note: Increasing these three time intervals reduces the frequency of background traffic generated by NDS. However, the processes are responsible for checking consistency of the NDS tree. Increasing these intervals may increase the overall time before inconsistency in the NDS tree is detected.

NDS Timeouts

On the DSTRACE screen, -625 messages occur when two servers cannot communicate. These messages are normal if a file server, router, or WAN is down. In some cases, however, you may find that -625 errors persist even when the WAN is open and the server is up. In effect, this indicates a timeout situation, similar to when a client times out after a NetWare server doesn't respond within a specified amount of time.

You can configure the NDS timeout with the following SET command:

SET NDS CLIENT NCP RETRIES=<value>

The default is to retry 3 times, but the value is configurable between 1 and 20. (This SET command is equivalent to the DSTRACE option SET DSTRACE=!X<value>.)

Caution: In 99.9% of cases, there is no need to change this parameter. The only time you may have to change it is if you are getting persistent -625 error messages and you know the WAN and the routers are up. With a higher retry count, if the remote server is not available, the server takes much longer before it times out and goes to the next server.

This parameter is configurable only on a per-server basis.

NDS Checksums. Although this feature will not reduce NDS traffic, DS.NLM version 4.96 and above has the additional functionality of performing a checksum on all server-to-server NDS traffic. This adds some additional reliability to data transfer, especially over a WAN. See "Enabling Request-IPX-Checksums to Eliminate NDS Packet Corruption Problems" on page 83 of the April 1996 Novell Application Notes for details.

Manual Dial-up Lines

One way of controlling NDS traffic over dial-up ISDN links is to open and close the ISDN lines manually. The advantage of this solution is that you are in control of when the lines are opened and closed and, therefore, the ISDN costs. (Note that downed links generate a -625 message on the DSTRACE screen.)

The disadvantage of this solution is that manual intervention is required to open and close the lines. This may be very time consuming. Also, when changes are made to NDS, they will not be propagated across the WAN until the line is reopened.

The lines must be open at least once per working day. The link should be left open long enough for a heartbeat, schema, and backlink process to complete.

Caution: All lines must be open when you are performing partition operations such as splits and joins.

Multiple Trees

Another possible way to control NDS traffic is to use separate trees for each location connected across the WAN. Novell's NetWare Client 32 software fully supports NDS logins to multiple trees. With the VLM client, you can do an NDS login into one tree and make a bindery attachment to the server(s) you want in the remote trees.

Having separate trees for each WAN location eliminates NDS traffic on your WAN, but the tradeoff is more difficult configuration for users and more management overhead.

NDS Spoofing in Routers

Several established Novell OEM partners, including AVM of Berlin and ITK of Dortmund, Germany, have addressed the problem with NDS spoofing solutions for their ISDN routers. When these ISDN routers receive an NDS packet destined for the remote location, they respond with a "-663 DS is locked" message. Users can then configure the time when they want the NDS traffic to pass through the spoofed link. These solutions are proprietary to the hardware-specific ISDN drivers.

Upcoming Solutions

In recent BrainShare conferences, Novell has outlined several upcoming enhancements to NDS that hold promise in helping to reduce synchronization traffic over WANs:

A WANMAN.NLM file for establishing policy-based filtering for NDS traffic

Federated Partitions, a method of producing the traffic characteristics and administrative controls of separate trees in a single tree

An enhanced NWADMIN utility which allows concurrent management of multiple trees

Summary

This Application Note has reviewed the traffic generating processes of NDS. It has also outlined several available methods of managing these processes to reduce the amount of synchronization traffic going across WAN links. Part 2 of this series will examine time synchronization traffic and present ways to manage this type of traffic across a WAN.

* Originally published in Novell AppNotes
