New Bottlenecks in LAN-based Imaging Systems

Senior Research Engineer

Novell Systems Research

BRENNA JORDAN

Research Assistant

Novell Systems Research

01 Mar 1996

The migration from DOS to Microsoft Windows as an imaging platform has uncovered new issues that add to what has been previously published on LAN-based imaging. Tests at Novell have identified the base operating system as a key factor in imaging system performance. Recent testing has turned up other critical factors, besides the operating system, that contribute to networked image retrieval performance. The prime focus of this AppNote is on the type of imaging system deployed. As demonstrated by our recent test results, the issue of whether the imaging system is based on compiled code or interpreted code plays a significant role in network performance.

PREVIOUS APPNOTES IN THIS SERIES

- Dec 95 "LAN-based Imaging Revisited"

- Nov 93 "Multi-Segment LAN Imaging: Departmental Configuration Guidelines"

- Jul 93 "Multi-Segment LAN Imaging Implementations: Four-Segment Ethernet"

- May 93 "Imaging Test Results: Retrieval Rates on Single- and Multiple-Segment LANs"

- Feb 93 "Imaging Configurations Performance Test Results"

- Jan 93 "Imaging Configurations and Process Testing"

- Oct 92 "The Past, Present, and Future Bottlenecks of Imaging"

- Jul 92 "The Hardware of Imaging Technology"

- May 92 "Issues and Implications for LAN-Based Imaging Systems"

- Introduction

- A New Imaging Test Bench

- Tracking Down New Bottlenecks

- Compiled vs. Interpreted Code

- Conclusion

Introduction

Few computing processes are more rigorous than multiple users performing large numbers of image retrievals over a network. Whether the image retrievals occur in a small office or on a corporate network, system designers and integrators must carefully analyze factors that contribute to network performance, as well as potential bottleneck areas.

In 1992, Novell Research published an Application Note that addressed specific bottleneck issues facing LAN-based imaging environments (see "The Past, Present, and Future Bottlenecks of Imaging" in the October 1992 Novell Application Notes). This was a fairly comprehensive article at the time, pointing out perennial bottlenecks such as CPU, memory, optical storage limitation, hard disk access, caching, and network bandwidth. It also predicted future performance hurdles such as application design and increased load on the server.

The migration from DOS to Microsoft Windows as an imaging platform has uncovered new issues that must be appended to our previous bottleneck article. The AppNote entitled "LAN-based Imaging Revisited" in the December 1995 issue presented our conclusion that the base operating system was a key factor in image retrieval performance. Recent testing has turned up other critical factors, besides the operating system, that contribute to networked image retrieval performance. The prime focus of this AppNote is on the type of imaging system deployed. As demonstrated by our recent test results, the issue of whether the imaging system is based on compiled code or interpreted code plays a significant role in network performance.

A New Imaging Test Bench

Before we jump into a discussion of imaging system types, it will be helpful to review the benchmark tests we have used in our imaging research. Our earliest run of image testing was performed under the DOS environment. This hard-coded test bench, called MUTEST, retrieved four unique images, with a total of ten image seeks per workstation. We found several distinct advantages to implementing a DOS-based imaging system. However, the industry moved on and embraced the graphically-oriented Windows interface. Accordingly, we migrated our test benches to run under Windows.

Our second run of tests was performed in the Windows environment. IMGTEST was a front-end, graphical, object-oriented test bench that retrieved images over the LAN channel. Our experience with IMGTEST brought up several Windows-based anomalies, many of which are still to be identified and explained. However, our main quest was to increase the end-user's knowledge on networked image retrieval implementation schemes, specifically in the Windows environment.

Our latest test bench was created by FileABC from the foundation of their commercially-available image retrieval databasing system. This test bench, which we refer to as FILEABC, is a fully compiled test bench that deploys the same image retrieval paradigm and hardware configuration routines as our previous IMGTEST test bench.

The following table compares our imaging test configurations.

| Test Bench | MUTEST | IMGTEST | FILEABC |
|---|---|---|---|
| Operating System | DOS | Microsoft Windows 3.1 | Microsoft Windows 3.1 |
| Server | Compaq SystemPro; 486/33 MHz; 40 MB RAM; 11 GB Micropolis Disk Array; NOS: NetWare 3.12; NE3200 NIC; NE2000 NIC (Same as IMGTEST & FILEABC) | Compaq SystemPro; 486/33 MHz; 40 MB RAM; 11 GB Micropolis Disk Array; NOS: NetWare 3.12; NE3200 NIC; NE2000 NIC (Same as MUTEST & FILEABC) | Compaq SystemPro; 486/33 MHz; 40 MB RAM; 11 GB Micropolis Disk Array; NOS: NetWare 3.12; NE3200 NIC; NE2000 NIC (Same as IMGTEST & MUTEST) |
| Workstations | 8 Workstations; Dell 386/25; NE2000 NIC to one Synoptics 10BASE2 Concentrator | 18 Workstations; Dell 386/25; NE2000 NIC; divided to three Eagle 10BASE-T Hubs (Same as FILEABC) | 18 Workstations; Dell 386/25; NE2000 NIC; divided to three Eagle 10BASE-T Hubs (Same as IMGTEST) |
| Images and Files | Files and images on server; 4 Unique Images; 10 Image Seeks; Medium Quality Images: 8.5" by 11" documents scanned at 150 DPI in TIFF format; non-color images | Files and images on server; 10 Unique Images; 200 Image Seeks; Medium Quality Images: 8.5" by 11" documents scanned at 150 DPI in TIFF format; non-color images (Same as FILEABC) | Files and images on server; 10 Unique Images; 200 Image Seeks; Medium Quality Images: 8.5" by 11" documents scanned at 150 DPI in TIFF format; non-color images (Same as IMGTEST) |
| Latency | 5-second latency period implemented to reduce across-the-wire collisions | No prescribed latency period because of internal lag of the test bench | No prescribed latency period |
| Image Retrieval Paradigm | One Image Per File (images treated as a file) | One Image Per File (images treated as a file) | One Image Per File (images treated as a file); other paradigms available |
| Image Caching | Cached in server memory | No caching | No caching |

Although the hardware used in testing differs slightly between the test benches, we have factored in the differences (for the purposes of this AppNote anyway) and have made test assertions regarding these minor differences.

We used the same image files in both the IMGTEST and FILEABC test benches. While we cannot make any claims as to the conversions of these images, we have noticed that the file sizes have remained relatively the same after conversion to the FILEABC test bench.

A final point to keep in mind when analyzing the graphical data presented in this AppNote is that our MUTEST tests were performed with 16-bit LAN drivers. Testing in the IMGTEST and FILEABC test benches was performed with Novell's Client32 software and 32-bit LAN drivers. However, the network adapters in the workstations were all 16-bit adapters. Our reasoning for running the 32-bit client with 16-bit adapters was to identify any differences in performance between 32-bit and 16-bit LAN drivers. We'll address these performance differences in future AppNotes.

Tracking Down New Bottlenecks

The traditional belief among imaging system implementors is that imaging systems (whether based on DOS or Windows) are primarily limited by server-specific issues such as network traffic and CPU utilization. We have found this to be true in several cases, specifically when coalescing a large number of clients to maximize the usage of the server's resources. In our earlier test studies, we noted that the maximum number of imaging users on a DOS platform is around 100 users, whereas for Windows the number is around 25 users. (Note that we're talking about a practical maximum which pushes the server as fast as it can go without sacrificing performance or overutilizing the server's valuable resources.)

When analyzing new technological implementations, it is easy to infer that most performance problems lie in current hardware and software technology, not in the testing schema. To the contrary, we have concluded that the base operating system plays a significant role in the processing of networked image retrievals. However, it is often overlooked that the combination of the operating system and the type of image retrieval system deployed actively contributes to network performance.

Differences in the Windows Environment

One of the main attractions of Windows early on was that it provided a more-or-less consistent platform for developing applications. Unfortunately, applications in Windows are not always treated consistently. This holds true even for image retrieval products. Before any system integration can take place, users must thoroughly analyze their needs against the product's features and capabilities.

Some issues that seem trivial, such as whether the imaging system is based on compiled code or interpreted code, actually play a significant role in network performance. As basic as this may seem, it is a critical issue that is often overlooked when analyzing image retrieval systems. To understand the effect that the coding paradigm can have on imaging performance, let's look at some of the older principles used in analyzing the "original" image retrieval deployment schemes.

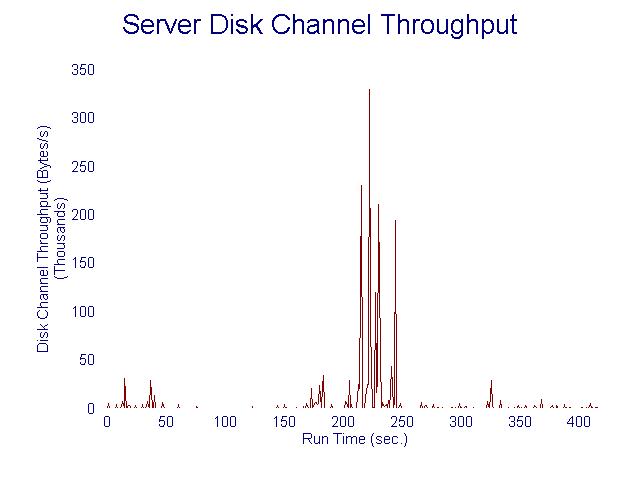

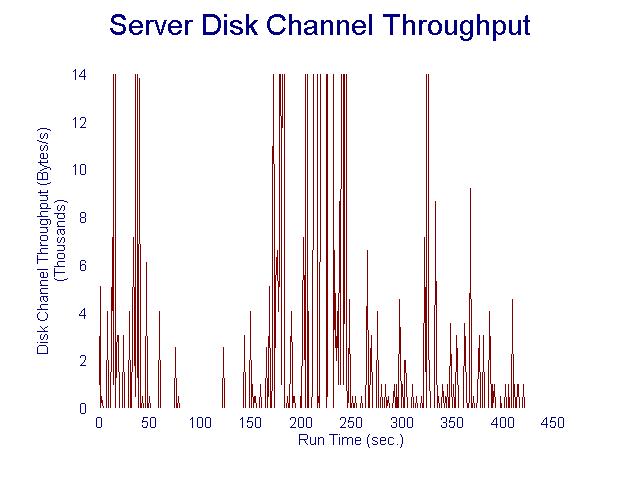

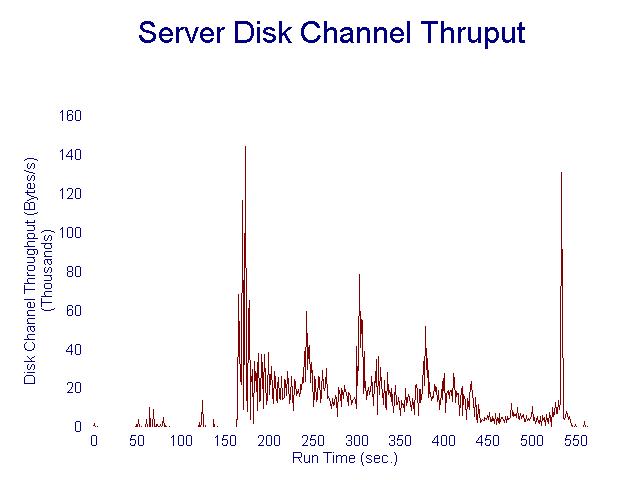

Disk Channel Throughput. Figures 1, 2, and 3 compare the disk channel throughput in each of our three imaging test benches.

Figure 1: Server disk channel throughput test results for MUTEST.

Figure 2: Server disk channel throughput test results for IMGTEST.

Figure 3: Server disk channel throughput test results for FILEABC.

Looking at the MUTEST and IMGTEST graphs alone, it is easy to infer that the Windows operating system is the main contributor to the extended disk activity. However, a direct comparison of the IMGTEST and FILEABC graphs shows an extreme difference in disk channel activity. IMGTEST and FILEABC are both running under Windows. While the general appearance of the graphs looks similar, the graph in Figure 3 shows that the actual disk channel throughput for FILEABC is much lower than that of the object-oriented IMGTEST test bench graphed in Figure 2.

Now let's "zoom in" on the MUTEST disk channel throughput graph (Figure 4), which shows its bursty nature in DOS.

Figure 4: Closer view of server disk channel throughput for MUTEST.

Comparing this data with the FILEABC graph uncovers an interesting similarity. The average peaks of the disk channel throughput in the FILEABC test bench are more similar to the MUTEST (DOS) test bench than the IMGTEST (Windows) bench. From this we conclude that while the operating system itself plays a significant role in the functionality of the test bench, it is not the only critical area for analysis.

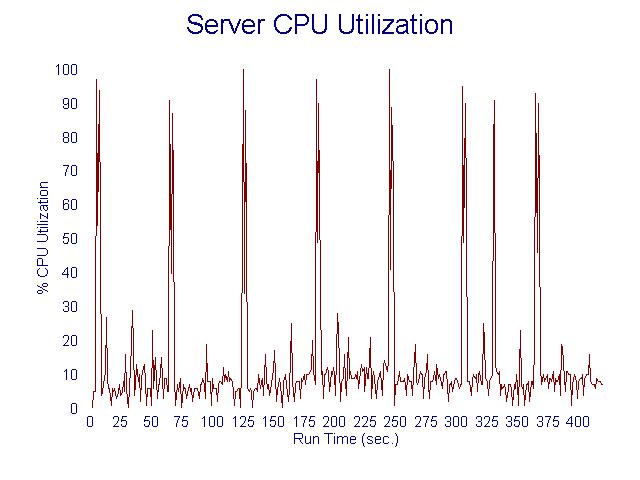

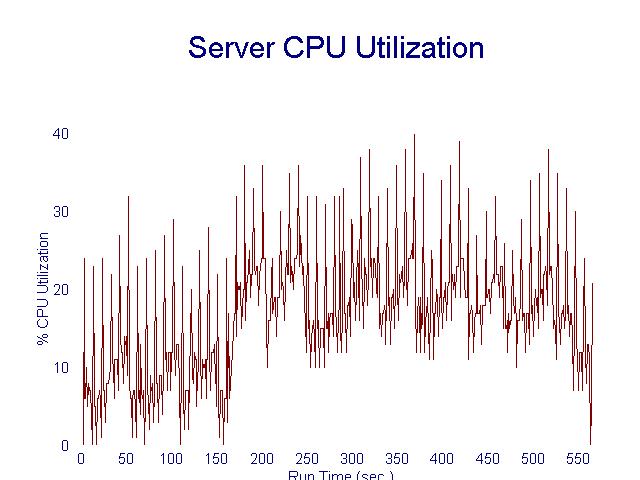

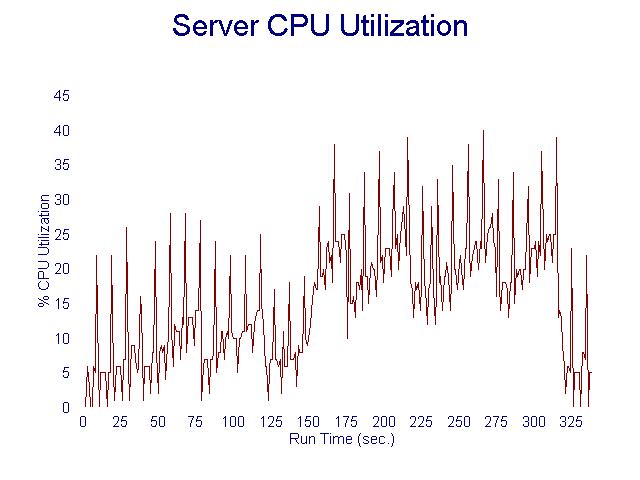

CPU Utilization. It is no secret that the Windows operating system itself taxes the server's CPU more significantly than does the DOS environment. Figures 5, 6, and 7 show the CPU utilization for each of our imaging test benches.

Figure 5: Graph of server CPU utilization for MUTEST.

Figure 6: Graph of server CPU utilization for IMGTEST.

Figure 7: Graph of server CPU utilization for FILEABC.

In Figure 5, we see that CPU utilization is bursty in the DOS environment. However, the overall median of the CPU utilization is much lower than in either of the Windows-based test benches, even after factoring in the high peaks from the "abnormal" bursts. Comparatively, the CPU utilization graphs for IMGTEST and FILEABC (Figures 6 and 7) look very similar.

On the face of it, one might conclude from this that Windows is the significant factor causing the relatively higher CPU utilization on the file server. However, it is important not to focus on one factor alone in analyzing a system's performance. All system components, including the operating system and the test bench itself, play a significant role in networked imaging retrieval environments.

Note the run times on the two Windows test benches. To properly analyze these numbers, we must understand where each test bench was initialized. In both IMGTEST and FILEABC, the test bench was initialized at approximately 150 seconds. (We can assert this by looking at where the CPU utilization "consistently" stays above the 10 percent mark.) In IMGTEST, the test ran for approximately 400 seconds, whereas the FILEABC test took approximately 175 seconds. This is a significant difference for image retrievals.
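The way the start point is read off the CPU graph, and the size of the run-time gap, can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the five-sample definition of "consistently," and the sample trace are all hypothetical; only the 10 percent mark and the 400-second and 175-second run times come from the text above.

```python
from typing import Optional, Sequence


def find_sustained_start(samples: Sequence[float],
                         threshold: float = 10.0,
                         min_run: int = 5) -> Optional[int]:
    """Return the index of the first sample that begins a run of at least
    `min_run` consecutive samples above `threshold`, or None if no such run."""
    run_start = None
    for i, value in enumerate(samples):
        if value > threshold:
            if run_start is None:
                run_start = i
            if i - run_start + 1 >= min_run:
                return run_start
        else:
            # A dip back under the threshold cancels the current run.
            run_start = None
    return None


def run_time_ratio(slow_seconds: float, fast_seconds: float) -> float:
    """How many times longer the slower run took."""
    return slow_seconds / fast_seconds


# Hypothetical CPU utilization trace (percent), one sample per interval.
trace = [2, 3, 12, 4, 3, 15, 22, 30, 28, 35, 40, 5]
start = find_sustained_start(trace, threshold=10.0, min_run=5)
print(start)                               # 5: the isolated spike at index 2 is ignored
print(round(run_time_ratio(400, 175), 2))  # 2.29
```

On the run times reported here, the interpreted IMGTEST run took roughly 2.3 times as long as the compiled FILEABC run.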

Compiled vs. Interpreted Code

The key difference between the two Windows test benches is that FILEABC is executed from compiled code, whereas IMGTEST is an interpreted-code test bench. What this essentially means is that the code for IMGTEST is not as compact as the code for FILEABC.

Various function calls are dispersed throughout the image-retrieval batch file. In an object-oriented test bench such as IMGTEST, the introduction of DLLs (Dynamic Link Libraries) and other supporting files creates several diversions to the performance of the image retrievals. Instead of retrieving a raw image and displaying it to each client, the object-oriented code must periodically refer to its batch file between every function call necessary to complete its task. This batch file can become intricate to the point where seeks are made for the several .DLL files, image files, and function calls within the batch file itself.

Thus, a task that may take one function call in a compiled-code test bench can easily see a ten-fold increase in an object-oriented test bench. Not only is the number of routines increased with the usage of an object-oriented test bench, but a slight amount of overhead is placed on each image as it comes across the wire. In a mass image retrieval situation, this could mean serious performance delays.
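The ten-fold figure can be put into rough numbers. The sketch below is a toy model, not a measurement. It assumes 18 workstations each performing 200 image seeks (one reading of the test configuration table, which does not say whether the 200 seeks are per workstation), one server request per seek for compiled code, and ten per seek for interpreted code. The function name `total_server_requests` is hypothetical.

```python
def total_server_requests(workstations: int,
                          seeks_per_workstation: int,
                          requests_per_seek: int) -> int:
    """Total requests reaching the server during one test run."""
    return workstations * seeks_per_workstation * requests_per_seek


WORKSTATIONS = 18
SEEKS_PER_WORKSTATION = 200  # assumption: the 200 seeks are per workstation

compiled = total_server_requests(WORKSTATIONS, SEEKS_PER_WORKSTATION, 1)
interpreted = total_server_requests(WORKSTATIONS, SEEKS_PER_WORKSTATION, 10)

print(compiled)     # 3600
print(interpreted)  # 36000
```

Under these assumptions the server fields tens of thousands of additional requests per run, which is where the per-image overhead described above begins to add up.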

This does not necessarily mean that the object-oriented test bench should be completely abandoned. Some advantages become readily apparent upon implementing an object-oriented image retrieval system. However, as noted in this AppNote, the advantages of compiled-code tend to outweigh those of an interpreted-code imaging system.

Advantages of Compiled Code

What are some distinct advantages of compiled-code imaging systems? First, as illustrated earlier, the disk channel accesses are lower with compiled code. What this means to the user is that less "redundant" activity is being traced back to the server, which in turn allots more system resources to the user rather than to the specifics in the imaging system.

Second, the CPU utilization appears lower in a compiled-code environment than in an interpreted-code environment. Reduction in the use of server resources such as the CPU allows for more efficient and effective image retrievals to the user. The more server resource utilization can be reduced, the fewer the chances that "systematic" network obstacles will occur. To the consumer, this also means that hardware costs will be effectively reduced because the need to upgrade server resources to keep up with technology is not as high as it would be in the object-oriented imaging environment.

Another advantage to compiled code is that actual access times are reduced. Hence, image retrievals occur much more efficiently than in an object-oriented imaging system.

Advantages of Interpreted Code

In fairness, we must also take into account some advantages of an interpreted-code imaging system. To do this, we need to introduce graphs of the error rates that occurred as images were being transmitted across the wire. For the sake of comparison, the focus of our attention will be placed on the error rate anomalies in the IMGTEST and FILEABC test benches (see Figures 8 and 9).

Figure 8: Graph of error rates in the IMGTEST test bench.

Figure 9: Graph of error rates in the FILEABC test bench.

Although in the FILEABC test bench each client is performing fewer function calls to the server, the error rates are higher than in the IMGTEST test bench. To understand why, we need to discuss the effects of latency.

Latency. IMGTEST sends several function calls to the server per request of each client. Since several requests are being made to the same file, the server must schedule the time it allows for each client to access specific files on the server. The extended activity at the server creates a latency period for the client while it is waiting for its turn to access the needed information. In addition, while each client is "waiting its turn" to access the next piece of data from the server, internal function calls will be made within each client to condition its operating system for the next image retrieval. This delay staggers the client/access load of the server just enough to reduce the chance of multiple collisions as data is being transmitted down the wire.

Although the compact code in FILEABC creates a comfortable environment for image retrieval speed, the error rates depicted in Figures 8 and 9 provide excellent statistical justification for a latency period in any Windows-based image retrieval system. FILEABC performs so efficiently that the server staggers its file access requests only fractionally, just enough to handle the server/client load.

Unfortunately, this does nothing for the error rates across the wire. The lack of a latency period allows each client to send data requests simultaneously to the server in hopes that they will be the first to receive a response from the server. What's worse is the clients are performing the same tasks at the same time. In essence, this is like drag-racing down a one-lane road with several opponents travelling at the same speed. Unless the client's data requests are staggered, multiple collisions will result.
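The effect of staggering can be illustrated with a deliberately simple slotted model. This is a sketch under stated assumptions, not a model of Ethernet's actual collision handling: time is divided into slots, each client transmits in exactly one slot, and any slot chosen by more than one client counts as a collision for every client in it. The function names, the window size of 200 slots, and the random seed are hypothetical; the 18 clients match the Windows test configuration.

```python
import random
from collections import Counter
from typing import Sequence


def colliding_clients(slots: Sequence[int]) -> int:
    """Count clients whose chosen slot is shared with at least one other client."""
    counts = Counter(slots)
    return sum(n for n in counts.values() if n > 1)


def simulate(clients: int, window: int, rng: random.Random) -> int:
    """Each client sends in a random slot within `window` slots.

    A window of 1 means no stagger: every client sends at the same instant.
    """
    slots = [rng.randrange(window) for _ in range(clients)]
    return colliding_clients(slots)


rng = random.Random(1996)  # fixed seed so the run is repeatable
no_stagger = simulate(clients=18, window=1, rng=rng)
staggered = simulate(clients=18, window=200, rng=rng)

print("colliding clients without stagger:", no_stagger)  # always all 18
print("colliding clients with stagger:   ", staggered)
```

With no stagger every client collides by construction. Spreading the same clients over a wider window leaves most slots with at most one sender, which is the role the internal latency plays in IMGTEST.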

In the event that a client's request reaches its destination ahead of schedule, the server will unconditionally send its response back to the client without realizing that its data is racing in opposition to the flow of traffic. This creates several problems, especially with line utilization and error rates. The fact is, a compiled-code test bench will attempt to retrieve its images in rapid succession across the LAN channel for a single client, disregarding the time scheduling needs of other workstations.

Although a compiled-code imaging system provides several benefits in terms of speed on a single-client basis, the reduction of errors will collectively improve the efficiency of the image retrievals for all workstations. Next we'll see how a latency period not only reduces the chances of across-the-wire collisions but also improves line utilization statistics significantly.

Line Utilization. As mentioned earlier, a test bench running under interpreted code (such as IMGTEST) causes an internal latency for each client that is calling the test bench. This latency period is uncontrollable in that it cannot be reduced or removed. The latency created in IMGTEST is a product of the multiple function calls and references that the client sends to the server. However, as shown in Figures 8 and 9, this latency period actually results in lower error rates.

Another benefit of the internal latency period for an object-oriented test bench is illustrated below with reference to line utilization. Figures 10 and 11 show the Ethernet line utilization for the IMGTEST and FILEABC test benches.

Figure 10: Graph of line utilization for IMGTEST.

Figure 11: Graph of line utilization for FILEABC.

Again, it seems like an anomaly that line utilization is much higher in the compiled-code test bench than in the interpreted-code test bench. Logically, one might come to the conclusion that since the IMGTEST test bench provides more function calls and activity to the server, the line utilization for IMGTEST must be a lot higher than for FILEABC. Although this reasoning may prove true to some extent, most of the line utilization reflects the actual image retrievals rather than the function calls. In other words, these graphs depict the way the images are being handled as they travel through the wire, rather than being an exclusive portrayal of the images or the individual function calls.

The IMGTEST graph (Figure 10) traces the various function calls being made by the object-oriented code, a slight pause, and then the images sent "behind" the previous function calls. On the other hand, the FILEABC graph (Figure 11) only traces the function calls and the image retrieval calls while the image passes through the wire. Also note the periodicity of line utilization above the zero percent mark.

Going back to our "run time" assertion, the IMGTEST results clearly show that the image retrieval test completed in about 400 seconds, whereas the same retrievals in the FILEABC test completed in approximately 175 seconds. Essentially, the same amount of data is being transferred across the wire in both cases. Given IMGTEST's internal latency, however, the line is not taxed as heavily, because the data is dispersed at intervals rather than sent as one big "chunk" for the sake of performing the image retrievals as quickly as possible. Because of the lack of spacing between requests in FILEABC, the line utilization soars in this test bench. However, the shorter actual time to perform the tests compensates for that loss.
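The relationship between run time and average line utilization can be worked through numerically. The sketch below assumes the nominal 10 Mbit/s Ethernet rate and a purely hypothetical data volume of 50 MB (the actual volume is not stated in this AppNote). It ignores framing overhead, retransmissions, and the division of workstations among three hubs. The function name `average_utilization` is also hypothetical.

```python
ETHERNET_BPS = 10_000_000  # nominal 10 Mbit/s Ethernet


def average_utilization(total_bytes: int, seconds: float,
                        link_bps: int = ETHERNET_BPS) -> float:
    """Average line utilization, in percent, for moving `total_bytes`
    over a link of `link_bps` bits per second in `seconds`."""
    return (total_bytes * 8) / (seconds * link_bps) * 100.0


TOTAL_BYTES = 50_000_000  # hypothetical volume, identical for both test benches

imgtest = average_utilization(TOTAL_BYTES, 400)  # about 400-second run
fileabc = average_utilization(TOTAL_BYTES, 175)  # about 175-second run

print(round(imgtest, 1))            # 10.0
print(round(fileabc, 1))            # 22.9
print(round(fileabc / imgtest, 2))  # 2.29
```

Whatever the real data volume, the ratio of the two averages depends only on the run times: moving the same data in 175 seconds instead of 400 raises average utilization by a factor of about 2.3.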

Conclusion

This AppNote has expanded our discussion of imaging system bottlenecks begun in 1992. It has presented two "new" bottleneck areas to consider: the base operating system and the coding paradigm of the imaging application. Neither of these factors is exclusive of the other. Our test results show that both the operating system foundation and the coding of the test benches contribute to performance of mass image retrievals in a networked system.

Two types of imaging systems are available for implementation: those based on interpreted code and those based on compiled code. As illustrated in this AppNote, integrating a compiled-code test bench provides several benefits. Although specific performance issues are relevant in terms of error rates and line utilization, the actual solution for these issues lies in integrating a latency period within the test bench.
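One way to picture a latency period inside a retrieval loop is sketched below. It is a minimal illustration, not the design of any of the test benches: `retrieve_with_latency`, its parameters, the file names, and the stand-in fetch function are all hypothetical, and the random jitter is an added assumption meant to keep clients from staying in step. The 5-second base delay echoes the latency period used in MUTEST.

```python
import random
import time
from typing import Callable, Iterable, List, Optional


def retrieve_with_latency(fetch: Callable[[str], bytes],
                          paths: Iterable[str],
                          base_delay: float,
                          jitter: float = 0.0,
                          sleep: Callable[[float], None] = time.sleep,
                          rng: Optional[random.Random] = None) -> List[bytes]:
    """Fetch each path in turn, pausing before every request.

    The pause is `base_delay` plus a random amount between 0 and `jitter`
    seconds, so clients started together drift apart over time.
    """
    rng = rng or random.Random()
    images = []
    for path in paths:
        sleep(base_delay + rng.uniform(0.0, jitter))
        images.append(fetch(path))
    return images


# Demonstration with stand-ins so nothing touches a real network or clock.
recorded_delays: List[float] = []


def fake_fetch(path: str) -> bytes:
    """Hypothetical fetch: returns the path as bytes instead of image data."""
    return path.encode("ascii")


images = retrieve_with_latency(fake_fetch,
                               ["img01.tif", "img02.tif", "img03.tif"],
                               base_delay=5.0, jitter=1.0,
                               sleep=recorded_delays.append,
                               rng=random.Random(0))
print(len(images), [round(d, 2) for d in recorded_delays])
```

Passing the sleep function in as a parameter keeps the delay policy separate from the retrieval code, so the latency period can be tuned or measured without changing how images are fetched.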

The needs of the end-user must be the prime consideration when implementing a networked imaging system. For now, users must judge what performance issues will benefit them most in their particular environment.

* Originally published in Novell AppNotes
